Winutils Exe Hadoop For Mac


I followed the directions here, but Spark still comes up with the error: 'Could not locate executable C:\hadoop\bin\winutils.exe in the hadoop binaries'. I have the right.

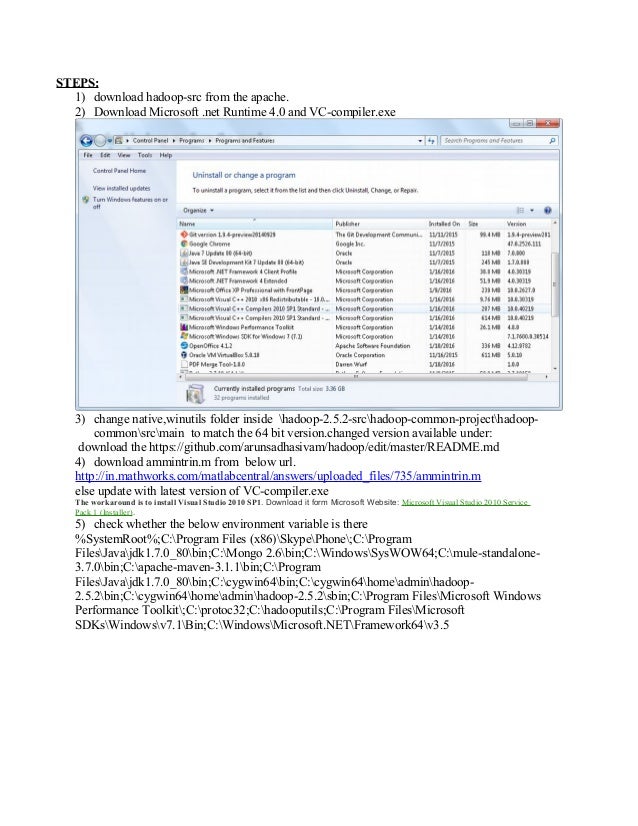

Good news for Hadoop developers who want to use Microsoft Windows for their development work: the Apache Hadoop 2.2.0 release officially supports running Hadoop on Windows. However, the binary distribution of Apache Hadoop 2.2.0 does not contain some Windows native components (such as winutils.exe and hadoop.dll). As a result, if we try to run Hadoop on Windows, we'll encounter errors such as 'Could not locate executable null\bin\winutils.exe in the Hadoop binaries'. In this article, I'll describe how to build a binary native distribution from the source code, then install, configure and run Hadoop on the Windows platform.

Download the Hadoop source and extract it to a folder with a short path (say c:\hdfs) to avoid runtime problems caused by the maximum path length limitation in Windows.

Select Start > All Programs > Microsoft Windows SDK v7.1 and open Windows SDK 7.1 Command Prompt.

Change directory to the Hadoop source code folder (c:\hdfs). Execute mvn package with the options -Pdist,native-win -DskipTests -Dtar to create the Windows binary tar distribution.

Windows SDK 7.1 Command Prompt:

    Setting SDK environment relative to C:\Program Files\Microsoft SDKs\Windows\v7.1.
    Targeting Windows 7 x64 Debug
    C:\Program Files\Microsoft SDKs\Windows\v7.1>cd c:\hdfs
    C:\hdfs>mvn package -Pdist,native-win -DskipTests -Dtar
    [INFO] Scanning for projects...

Note: I have pasted only the first few lines of the huge log generated by Maven. This build step requires an Internet connection, as Maven will download all the required dependencies. If everything goes well in the previous step, the native distribution hadoop-2.2.0.tar.gz will be created inside the C:\hdfs\hadoop-dist\target\hadoop-2.2.0 directory.

Install Hadoop

Extract hadoop-2.2.0.tar.gz to a folder (say c:\hadoop).

Add the environment variable HADOOP_HOME and edit the Path variable to add the bin directory of HADOOP_HOME (say C:\hadoop\bin).
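A quick way to confirm that the variables took effect is to check them from a new process. The following is a minimal sketch under stated assumptions: the function name check_hadoop_env is hypothetical, and it only checks the two things described above (HADOOP_HOME is set and its bin directory, containing winutils.exe, is on Path).

```python
import os
from pathlib import Path


def _norm(path):
    # normcase lowercases and unifies slashes on Windows; it is a no-op elsewhere.
    return os.path.normcase(os.path.normpath(path))


def check_hadoop_env(env=None):
    """Return a list of problems with HADOOP_HOME and Path; empty means OK."""
    env = os.environ if env is None else env
    home = env.get("HADOOP_HOME")
    if not home:
        return ["HADOOP_HOME is not set"]

    problems = []
    bin_dir = Path(home) / "bin"
    if not (bin_dir / "winutils.exe").is_file():
        problems.append(f"winutils.exe not found in {bin_dir}")

    # A plain dict is case-sensitive, so accept either spelling of the variable.
    path_value = env.get("Path") or env.get("PATH") or ""
    entries = {_norm(p) for p in path_value.split(os.pathsep) if p.strip()}
    if _norm(str(bin_dir)) not in entries:
        problems.append(f"{bin_dir} is not on Path")
    return problems
```

Calling check_hadoop_env() with no arguments inspects the current process environment. Note that a command prompt opened before the variables were changed will still see the old values.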

Configure Hadoop

Make the following changes to configure Hadoop.

File: C:\hadoop\etc\hadoop\core-site.xml


A URI whose scheme and authority determine the FileSystem implementation. The URI's scheme determines the config property (fs.SCHEME.impl) naming the FileSystem implementation class. The URI's authority is used to determine the host, port, etc. for a filesystem.

File: C:\hadoop\etc\hadoop\hdfs-site.xml


dfs.replication: Default block replication. The actual number of replications can be specified when the file is created. The default is used if replication is not specified at create time.

dfs.namenode.name.dir: Determines where on the local filesystem the DFS name node should store the name table (fsimage). If this is a comma-delimited list of directories, then the name table is replicated in all of the directories, for redundancy.

dfs.datanode.data.dir: Determines where on the local filesystem a DFS data node should store its blocks. If this is a comma-delimited list of directories, then data will be stored in all named directories, typically on different devices. Directories that do not exist are ignored.

Note: Create the namenode and datanode directories under c:/hadoop/data/dfs/.

File: C:\hadoop\etc\hadoop\yarn-site.xml

The file sets the following properties:

    yarn.nodemanager.aux-services: mapreduce_shuffle
    yarn.nodemanager.aux-services.mapreduce.shuffle.class: org.apache.hadoop.mapred.ShuffleHandler
    yarn.application.classpath: %HADOOP_HOME%\etc\hadoop,%HADOOP_HOME%\share\hadoop\common\*,%HADOOP_HOME%\share\hadoop\common\lib\*,%HADOOP_HOME%\share\hadoop\mapreduce\*,%HADOOP_HOME%\share\hadoop\mapreduce\lib\*,%HADOOP_HOME%\share\hadoop\hdfs\*,%HADOOP_HOME%\share\hadoop\hdfs\lib\*,%HADOOP_HOME%\share\hadoop\yarn\*,%HADOOP_HOME%\share\hadoop\yarn\lib\*

yarn.nodemanager.aux-services: The auxiliary service name. Default value is mapreduce_shuffle.

yarn.nodemanager.aux-services.mapreduce.shuffle.class: The auxiliary service class to use. Default value is org.apache.hadoop.mapred.ShuffleHandler.

yarn.application.classpath: CLASSPATH for YARN applications. A comma-separated list of CLASSPATH entries.
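Because the classpath value is long and easy to mistype, it can help to assemble it from its parts. The sketch below only rebuilds the comma-separated value listed above; the names YARN_CLASSPATH_DIRS and yarn_application_classpath are hypothetical helpers, not part of Hadoop.

```python
# Sub-directories of the Hadoop home that make up yarn.application.classpath,
# in the order used in this article.
YARN_CLASSPATH_DIRS = [
    r"etc\hadoop",
    r"share\hadoop\common\*",
    r"share\hadoop\common\lib\*",
    r"share\hadoop\mapreduce\*",
    r"share\hadoop\mapreduce\lib\*",
    r"share\hadoop\hdfs\*",
    r"share\hadoop\hdfs\lib\*",
    r"share\hadoop\yarn\*",
    r"share\hadoop\yarn\lib\*",
]


def yarn_application_classpath(home="%HADOOP_HOME%"):
    """Join the entries into the comma-separated form the property expects."""
    return ",".join(home + "\\" + sub_dir for sub_dir in YARN_CLASSPATH_DIRS)
```

Leaving home as the literal %HADOOP_HOME% keeps the value portable across machines. Passing a concrete folder instead produces absolute paths.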

File: C:\hadoop\etc\hadoop\mapred-site.xml

mapreduce.framework.name: The runtime framework for executing MapReduce jobs. Can be one of local, classic or yarn.
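All of the files above share the same layout: a configuration root element holding property entries, each with a name and a value. As a rough sketch of that layout, the helper below renders a dictionary as such a document. The helper name hadoop_configuration_xml is hypothetical, and every property value shown is an illustrative assumption rather than the setting used in this article.

```python
import xml.etree.ElementTree as ET


def hadoop_configuration_xml(properties):
    """Render a dict as a Hadoop-style <configuration> document."""
    root = ET.Element("configuration")
    for name, value in properties.items():
        prop = ET.SubElement(root, "property")
        ET.SubElement(prop, "name").text = name
        ET.SubElement(prop, "value").text = str(value)
    ET.indent(root)  # pretty-print; available from Python 3.9
    return ET.tostring(root, encoding="unicode")


# Assumed example values; adjust them to your own setup.
mapred_site = hadoop_configuration_xml({"mapreduce.framework.name": "yarn"})
hdfs_site = hadoop_configuration_xml({
    "dfs.replication": 1,
    "dfs.namenode.name.dir": "file:/hadoop/data/dfs/namenode",
    "dfs.datanode.data.dir": "file:/hadoop/data/dfs/datanode",
})
```

The resulting strings can be written to the corresponding files under C:\hadoop\etc\hadoop once the values have been reviewed.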

Format namenode

The namenode needs to be formatted the first time only.

Command Prompt:

    Microsoft Windows [Version 6.1.7601]
    Copyright (c) 2009 Microsoft Corporation. All rights reserved.


Run wordcount MapReduce job

Follow the post

Stop HDFS & MapReduce

Command Prompt:

    C:\Users\abhijitg>cd c:\hadoop\sbin
    c:\hadoop\sbin>stop-dfs
    SUCCESS: Sent termination signal to the process with PID 876.
    SUCCESS: Sent termination signal to the process with PID 3848.
    C:\hadoop\sbin>stop-yarn
    stopping yarn daemons
    SUCCESS: Sent termination signal to the process with PID 5920.
    SUCCESS: Sent termination signal to the process with PID 7612.
    INFO: No tasks running with the specified criteria.

10:02:13,492 main ERROR Shell: Failed to locate the winutils binary in the hadoop binary path
java.io.IOException: Could not locate executable null\bin\winutils.exe in the Hadoop binaries.
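The path in this message hints at the cause. Hadoop builds it from its home directory (the HADOOP_HOME environment variable or the hadoop.home.dir Java system property), so a leading null means that neither was visible to the JVM, while a real path such as C:\hadoop\bin\winutils.exe means the home was found but the file is missing. A small sketch that applies this rule to a log line follows; the function name diagnose_winutils_error is hypothetical.

```python
import re

# Captures the path Hadoop reports it looked for.
_WINUTILS_ERROR = re.compile(
    r"Could not locate executable (?P<path>.+?winutils\.exe) in the Hadoop binaries",
    re.IGNORECASE,
)


def diagnose_winutils_error(message):
    """Return a short hint for a winutils error line, or None if it does not match."""
    match = _WINUTILS_ERROR.search(message)
    if match is None:
        return None
    path = match.group("path").strip()
    if path.lower().startswith("null"):
        return ("Hadoop home is unset: define HADOOP_HOME "
                "(or the hadoop.home.dir Java system property).")
    return f"Hadoop home is set, but no file exists at {path}."
```

For the log line above the function reports an unset Hadoop home, which points back to the environment variable step rather than to a missing build artifact.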
